Tiers of speed in digital products

In the chase for speed and a shorter time to market, what would you sacrifice?

I would bet quality is not the first thing that comes to your mind. Still, because it is hard to define and complicated to represent in metrics, it is often one of the casualties.

Over long years of experience, I have recognized a pattern. It is not that anyone intends to make low-quality products. It is more about inexperience with how they are made or, even more often, with what it takes to engineer quality software.

Over-engineering is a problem in itself, but I do not regard it as an increase in quality. Quite the opposite: it may even reduce quality.

Quality is really personal. It is not an objective marker, yet many of us “feel” what quality is when we meet it. This feeling is hard, but not impossible, to translate into metrics. It is much harder to connect the change in those metrics to the effort invested. That is worth another post.

We know that iterating fast is good. The feedback loop runs faster, we learn more, and we can adjust the product to the needs of our customers.

However, the different disciplines that need to work in unison progress at vastly different speeds. At least, if you want a semblance of quality.

New business ideas, feature ideas, monetization hooks, or a different copy to convince prospects to convert can be drafted in moments. Working them out to a passable degree can be very fast, on the hours-to-days horizon. Then you can validate the next idea in a day.

Drafting a prototype, working on a branding style, and showing something promising can be fast, too. Even showing something to users and running tests and interviews can be fast, with quality. A practiced UX designer can do it on the days-to-weeks horizon, then iterate to the next prototype in another week or faster.

However, putting the above ideas to work in actual software may be on the weeks-to-months horizon. How is that? The prototype seems to work just fine, and it was done in days.

Don’t laugh at the naive thought; we all have similar ones in areas of expertise we are not familiar with.

Instead, approach it constructively. Why does engineering seem to move “slow”, and how fast can it be?

The first issue is the size of the problem/solution space. Imagine a coordinate system and draw a little circle around the origin, just 1 unit wide. This may be the problem space the business idea considered, or had to solve for, to prove viability.

Then draw a circle around the origin with a 10-unit radius. This may be the number of problems the UX design considered, involving new angles like user understanding and feedback on outputs. Even progress bars, disabled buttons, and their state transitions are thought of at this step.

Now add a circle with a 1,000-unit radius. This is what engineering needs to consider. We have simplified our own lives a lot by using frameworks and packages, so we don’t need to solve the whole circle ourselves every day, but we still have plenty to consider as engineers.
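To get a feel for how quickly those circles grow, here is a small sketch of the arithmetic. It assumes the first circle has a radius of 1 unit and that the area of a circle stands in for the size of the problem space. Both are my simplifications for illustration, not measurements.

```python
import math


def area(radius: float) -> float:
    """Area of a circle, used here as a stand-in for problem-space size."""
    return math.pi * radius ** 2


# Radii taken from the text; treating the first circle as radius 1 is an assumption.
radii = {"business idea": 1, "UX design": 10, "engineering": 1000}

base = area(radii["business idea"])
for name, radius in radii.items():
    # Area grows with the square of the radius, so 10x the radius is 100x the space.
    print(f"{name}: {round(area(radius) / base):,}x the business problem space")
```

The point is not the exact numbers but the shape of the growth: each step outward multiplies the space to cover far more than the radius alone suggests.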

How is the button disabled, and does it show a tooltip anyway? Is it clicked or not, hovered, hover left, right-clicked? Does it navigate? What happens if it opens a dialog on top of a dialog? And so on. That is just a single button. Now consider the complex background processes handling your credit card information or issuing your train ticket.
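A rough way to see how fast the questions about one button multiply is to enumerate the combinations. The dimensions and values below are hypothetical, picked only for illustration; a real component would have its own, and many combinations would be ruled out by design.

```python
from itertools import product

# Hypothetical interaction dimensions for a single button.
# The names and values are illustrative assumptions, not a complete UI model.
DIMENSIONS = {
    "enabled": (True, False),
    "pointer": ("idle", "hovered", "pressed"),
    "focused": (True, False),
    "tooltip": ("hidden", "visible"),
    "dialog_depth": (0, 1, 2),  # dialogs already open when the button is used
}


def enumerate_states(dimensions):
    """Return every combination of dimension values as a list of dicts."""
    names = list(dimensions)
    return [dict(zip(names, combo)) for combo in product(*dimensions.values())]


states = enumerate_states(DIMENSIONS)
print(len(states))  # 2 * 3 * 2 * 2 * 3 = 72
```

Five small dimensions already give 72 combinations, and somebody has to decide, explicitly or by accident, what the button does in each of them.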

What you saw and did as a user was just entering a few data points in a form. What happens in the background is a choreographed dance of dozens, if not hundreds, of functions with thousands of lines of code representing stages, checks, and validations. Is your seat actually free to book? Did the payment arrive, and was it booked? Then comes issuing a complex identifier that is unique to you and that the conductor can check, a.k.a. a QR code.

What engineers do at the beginning is a logical exercise: carve out areas of the problem space and solve for the distinct parts of what is now a Venn diagram, and for the transitions between the stages. (Engineers, think twice: how does a simple if divides the problem?)

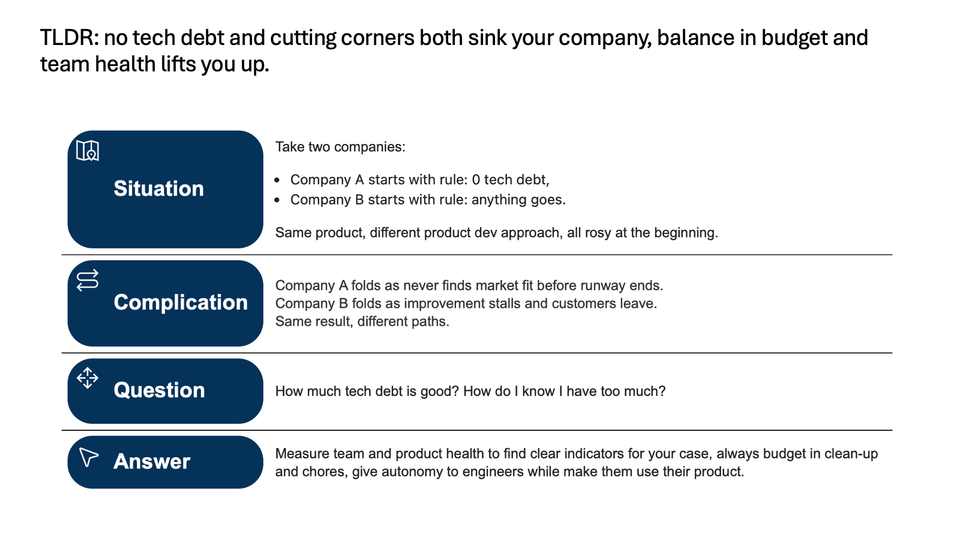
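For the engineers taking up that question about the simple if, here is a minimal sketch. It assumes the conditions are independent yes/no checks, and the check names are hypothetical, loosely borrowed from the train ticket example.

```python
from itertools import product


def regions(conditions):
    """Every yes/no outcome across the given conditions.

    Each independent condition cuts every existing region in two,
    so n conditions produce 2 ** n regions.
    """
    return list(product((True, False), repeat=len(conditions)))


# Hypothetical checks from a ticket booking flow; the names are assumptions.
checks = ["seat_is_free", "payment_arrived", "ticket_issued"]

for count in range(len(checks) + 1):
    considered = checks[:count]
    print(f"{count} checks -> {len(regions(considered))} regions to handle")
```

In practice many regions collapse into one another (no ticket is issued without a payment), but someone still has to reason about which ones collapse and why.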

And back to quality versus speed. The problem is that you can easily push for speed in engineering. What is not easy to see, and what only experience will teach you, is that quality is sacrificed when you do so.

Either maintainability is sacrificed: documentation is not written, testing is not automated, and no protection against future mistakes is added.

Or stability is sacrificed: crashes, errors for unknown reasons, failed system recovery, missing backups or backups that can’t be restored.

Or security is sacrificed: gaping data leaks and easy-to-break systems, risking huge legal fees and brand damage.

Or scalability is sacrificed, both human and technical: spaghetti code can’t be worked on in parallel, even for seemingly unrelated features; resources are wasted during scale-up; or scaling hits a hard limit, as when horizontal database scaling is impossible with some architectures.

Or all of the above are sacrificed. And you may not even know about it at the time.

The only reliable solution I have seen work is to make a conscious decision about where to invest effort across these dimensions: speed vs. quality vs. scope (where quality = maintainability + stability + security + scalability).

Cutting the scope, a.k.a. breaking up the business idea into distinct pieces, is the greatest lever you can pull here. It is the only thing that directly affects the size of the problem/solution space. The smaller that space, the less effort is necessary for a quality implementation.

How do you approach this equation? How do you think it relates to the cost-scope-time triangle so prevalent in management?